
“Digital transformation” is often diluted into a buzzword, but for engineering leaders, it’s a concrete strategy to escape the gravitational pull of legacy systems. The goal isn’t a cosmetic tech upgrade; it’s a fundamental re-architecture of how you operate and deliver value. It’s the shift from building standalone hardware to creating connected, intelligent systems that reduce operational risk and accelerate speed to market.

For decision-makers in robotics, medical devices, or industrial automation, this isn’t an abstract IT initiative. It’s a targeted engineering mandate to solve persistent, costly business problems rooted in disconnected systems and a lack of real-time operational data. This operational drag manifests as delayed product launches, bloated R&D budgets, and a reactive, inefficient service model.

Redefining Digital Transformation for Engineering Outcomes

Effective Digital Transformation requires a precise diagnosis of the underlying issues that constrain your business. Many organizations are stuck treating symptoms—like excessive downtime or slow innovation cycles—without addressing the root cause.

Diagnosis of Core Systemic Constraints

Before prescribing a solution, a clear-eyed diagnosis is essential. These common failure modes are often interconnected:

- Problem: Reactive Maintenance Model. Operations are trapped in a break-fix cycle. Equipment failure triggers unplanned downtime, halting production and incurring significant direct and opportunity costs. The core issue is a lack of predictive insight into asset health.

- Problem: Constrained Innovation Velocity. Prototyping, testing, and iterating on new features is slow and expensive. While your team navigates legacy constraints, more agile competitors are capturing market share with faster release cadences.

- Problem: Post-Deployment Data Blindness. Once a product ships, it becomes a black box. There is no visibility into real-world usage patterns, performance degradation, or failure precursors. This prevents data-driven product improvement and proactive support.

- Problem: High Compliance Overhead. In regulated environments governed by standards like IEC 62304 for medical device software, manual documentation and validation processes are slow, error-prone, and divert high-value engineering resources from innovation to paperwork.
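Part of this overhead can be automated. Regulated software processes such as IEC 62304 call for traceability between software requirements and the tests that verify them. Below is a minimal sketch of a check that a CI pipeline could run on every commit. It assumes requirement and test links have already been exported from your tools into plain Python structures; the `SRS-`/`TC-` ID formats and both helper functions are hypothetical.

```python
def untraced_requirements(requirements, test_links):
    """Requirement IDs that no test case claims to verify."""
    covered = {req for reqs in test_links.values() for req in reqs}
    return sorted(set(requirements) - covered)


def dangling_links(requirements, test_links):
    """(test_id, requirement_id) pairs that point at requirements which do not exist."""
    known = set(requirements)
    return sorted(
        (test_id, req) for test_id, reqs in test_links.items() for req in reqs if req not in known
    )


# Hypothetical exports from a requirements tool and a test management tool.
requirements = ["SRS-001", "SRS-002", "SRS-003"]
test_links = {"TC-10": ["SRS-001"], "TC-11": ["SRS-001", "SRS-004"]}

print(untraced_requirements(requirements, test_links))  # ['SRS-002', 'SRS-003']
print(dangling_links(requirements, test_links))         # [('TC-11', 'SRS-004')]
```

Failing the build when either list is non-empty keeps the trace matrix current as a by-product of development. It does not replace the review and sign-off steps your quality system requires.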

These are not isolated technical issues; they are systemic business constraints that directly impact profitability and competitive positioning.

The Strategic Solution and Desired Outcome

The solution is not a monolithic technology overhaul. It’s the surgical application of digital transformation solutions to achieve specific, measurable business outcomes. This involves architecting an ecosystem where embedded systems, cloud platforms, and intelligent automation work in concert.

The objective is to convert static products into dynamic, data-generating assets that deliver continuous value. This integration enables predictive maintenance, remote diagnostics, and data-backed product evolution, creating a sustainable competitive advantage and unlocking new service-based revenue models.

The outcome is a fundamental shift in the business model, from reactive and transactional to proactive and data-driven. This transition from selling hardware to providing intelligent services is where market leaders are heading. According to Grand View Research, the global digital transformation market size was valued at USD 731.13 billion in 2022 and is projected to grow at a CAGR of 26.1% from 2023 to 2030. Growth at that pace signals that adoption is becoming a matter of competitive survival.

How Core Technologies Actually Drive Business Outcomes

Applying technology without a clear line of sight to a business outcome is a recipe for expensive failure. For an engineering leader, every architectural decision—from firmware design to cloud protocol selection—must be directly traceable to its impact on operational efficiency, risk reduction, or revenue generation. The focus must be on the outcome, not the technology itself.

The foundation of any intelligent device is its embedded system and firmware. This is where small architectural flaws can cascade into catastrophic field failures. A common failure mode is firmware designed as a static, monolithic block. When a device malfunctions post-deployment, the only recourse is often a costly product recall or an on-site technician visit, bleeding resources and eroding brand trust.

From Embedded Logic to Service Revenue

Now, consider the outcome when that same firmware is architected with secure Over-the-Air (OTA) update capabilities from day one. The static product is transformed into a manageable asset. This architectural choice immediately reduces post-launch support costs by enabling remote debugging, patching, and feature deployment. It directly mitigates the risk of costly field interventions and improves profit margins.
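The sketch below is a minimal illustration of the device-side checks a secure OTA path typically performs before applying an image: an integrity check, an authenticity check, and an anti-rollback version check. It assumes a hypothetical manifest with `version`, `sha256`, and `mac` fields. To stay within the Python standard library it uses an HMAC over the image digest as a stand-in for a signature; production devices generally verify an asymmetric signature (for example ECDSA) against a public key held in protected storage.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass
class Manifest:
    """Hypothetical update manifest delivered alongside the firmware image."""
    version: int  # monotonically increasing firmware version
    sha256: str   # hex digest of the image
    mac: str      # hex HMAC-SHA256 of the digest (stand-in for a real signature)


def sign_manifest(image: bytes, version: int, key: bytes) -> Manifest:
    """Build a manifest on the (hypothetical) build-server side."""
    digest = hashlib.sha256(image).hexdigest()
    mac = hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()
    return Manifest(version=version, sha256=digest, mac=mac)


def verify_update(image: bytes, manifest: Manifest, key: bytes, installed_version: int) -> bool:
    """Return True only if the image is intact, authentic, and newer than what is installed."""
    # Anti-rollback: refuse downgrades to older, possibly vulnerable, firmware.
    if manifest.version <= installed_version:
        return False
    # Integrity: the image must hash to the digest named in the manifest.
    digest = hashlib.sha256(image).hexdigest()
    if not hmac.compare_digest(digest, manifest.sha256):
        return False
    # Authenticity: the manifest must carry a valid MAC under the shared key.
    expected = hmac.new(key, manifest.sha256.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, manifest.mac)


key = b"demo-key-not-for-production"
image = b"\x00firmware-v2\xff"
m = sign_manifest(image, version=2, key=key)
print(verify_update(image, m, key, installed_version=1))         # True
print(verify_update(image + b"x", m, key, installed_version=1))  # False: tampered image
print(verify_update(image, m, key, installed_version=2))         # False: not newer
```

A real bootloader would also keep the previous image available so that a failed update can roll back cleanly instead of bricking the device.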

This leads to the next layer: cloud and IoT connectivity. The strategic goal is not merely to connect a device but to establish a secure and reliable data pipeline that converts raw operational telemetry into actionable business intelligence.

Use Case: Industrial Pump Manufacturer.

Problem: Customers experience costly, unplanned production shutdowns when pumps fail unexpectedly.

Diagnosis: The manufacturer has no visibility into asset health once a pump is installed.

Solution: IoT sensors are integrated to monitor vibration, temperature, and pressure in real-time. This data is streamed via a cellular gateway to a cloud platform, where a predictive maintenance algorithm identifies anomalies that signal impending bearing failure.

Outcome: The system alerts the customer before the failure occurs, allowing for scheduled maintenance. This prevents catastrophic downtime, reduces risk for the customer, and allows the manufacturer to sell a new high-margin “Predictive Maintenance” service, shifting from a transactional to a recurring revenue model.
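The use case does not say which predictive algorithm is used. As a deliberately simple illustration, the sketch below flags vibration readings that deviate sharply from a trailing baseline using a rolling z-score. The window size, the threshold, and the assumption that readings are RMS vibration velocity in mm/s are all placeholders; real bearing-failure models typically rely on spectral features and models trained on labeled failure data.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_zscore_alerts(readings, window=20, threshold=3.0):
    """Yield (index, value, z) for readings that deviate from the trailing window.

    readings: iterable of vibration amplitudes (assumed RMS velocity, mm/s).
    window: number of prior samples that form the baseline.
    threshold: |z| above which a reading is flagged.
    """
    history = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0:
                z = (value - mu) / sigma
                if abs(z) > threshold:
                    yield i, value, z
        history.append(value)  # note: flagged values also enter the baseline


# Steady baseline with a small ripple, then a sudden jump such as a developing fault.
data = [2.0 + 0.1 * ((i % 5) - 2) for i in range(40)] + [4.5]
for idx, val, z in rolling_zscore_alerts(data):
    print(f"sample {idx}: {val} mm/s (z = {z:.1f})")
```

A production system would tune the threshold against historical failures to balance missed detections against alert fatigue.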

Of course, this introduces new trade-offs. The design must account for data transmission costs, network latency in control-sensitive applications, and robust security protocols aligned with frameworks like the NIST Cybersecurity Framework to protect sensitive operational data. For a deeper dive, see our guide on building a successful industrial IoT solution.
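The data-transmission trade-off is easy to quantify before committing to an architecture. The back-of-the-envelope sketch below compares streaming raw samples with sending edge-aggregated summaries. The sample rate, message sizes, and per-megabyte price are placeholder assumptions, not carrier figures.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600


def monthly_megabytes(messages_per_second: float, bytes_per_message: int) -> float:
    """Telemetry volume per device per 30-day month, in megabytes (10**6 bytes)."""
    return messages_per_second * bytes_per_message * SECONDS_PER_MONTH / 1e6


# Hypothetical pump: one message carries vibration, temperature, and pressure.
raw = monthly_megabytes(messages_per_second=10, bytes_per_message=64)        # raw samples at 10 Hz
edge = monthly_megabytes(messages_per_second=1 / 60, bytes_per_message=256)  # 1-minute summaries

price_per_mb = 0.01  # assumed cellular price in USD; substitute your plan's rate
print(f"raw:  {raw:,.1f} MB/month, about ${raw * price_per_mb:,.2f}")
print(f"edge: {edge:,.1f} MB/month, about ${edge * price_per_mb:,.2f}")
```

At these assumed rates, edge aggregation cuts volume by a factor of 150, at the cost of discarding high-frequency detail that some vibration analyses need. That tension is one reason the edge-versus-cloud split deserves an explicit design decision.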

Intelligence and Automation in Practice

Artificial Intelligence (AI) and automation translate this data into intelligent action. This moves the system from passive monitoring to active optimization and control.

Consider these practical applications tied to cost control and quality improvement:

- Machine Vision for Quality Control: An AI-powered camera on a manufacturing line inspects components for microscopic defects at a speed and accuracy unattainable by human inspectors. This drives scrap rates toward zero and prevents defective products from reaching customers.

- Algorithmic Path Optimization: For an autonomous mobile robot (AMR) in a warehouse, AI algorithms dynamically calculate the most efficient retrieval path, factoring in real-time congestion and order priority to increase throughput and lower operational costs.
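As a simplified illustration of congestion-aware routing, the sketch below runs Dijkstra's algorithm on a warehouse grid where each cell's traversal cost is inflated by a congestion factor. The grid layout, the cost model, and the congestion values are assumptions. Production AMR planners also handle robot kinematics, multi-robot coordination, and continuous replanning, and may use A* or other planners.

```python
import heapq


def shortest_path(grid, start, goal):
    """Dijkstra over a 4-connected grid.

    grid[r][c] is the cost to enter a cell (1 = free aisle, higher = congested),
    or None for an obstacle such as a rack. Returns (total_cost, path) or (inf, []).
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            path = [cell]
            while cell in prev:  # walk predecessors back to the start
                cell = prev[cell]
                path.append(cell)
            return d, path[::-1]
        if d > dist.get(cell, float("inf")):
            continue  # stale queue entry superseded by a cheaper route
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] is not None:
                nd = d + grid[nr][nc]
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(heap, (nd, (nr, nc)))
    return float("inf"), []


X = None  # rack
grid = [
    [1, 1, 1, 1],
    [1, X, X, 1],
    [1, 5, 5, 1],  # congested aisle
]
cost, path = shortest_path(grid, (2, 0), (2, 3))
print(cost, path)  # detours through the free top aisle instead of the congested one
```

Updating the congestion costs from live fleet data and replanning periodically is what turns this static calculation into the dynamic optimization described above.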

Integrating these technologies requires a clear assessment of computational needs (edge vs. cloud), algorithm training data requirements, and the real-time constraints of the environment. A system requiring a hard real-time response, like a robotic controller, has vastly different architectural constraints than a soft real-time system used for inventory analysis. Each layer enables the next: robust firmware allows for reliable data collection, which fuels intelligent automation—driving measurable business growth.

Your Practical Implementation Roadmap

Executing a digital transformation without a disciplined, phased approach is a primary cause of failure. A structured roadmap is not about bureaucracy; it is a risk mitigation strategy that ensures every investment is tied to a validated business objective.

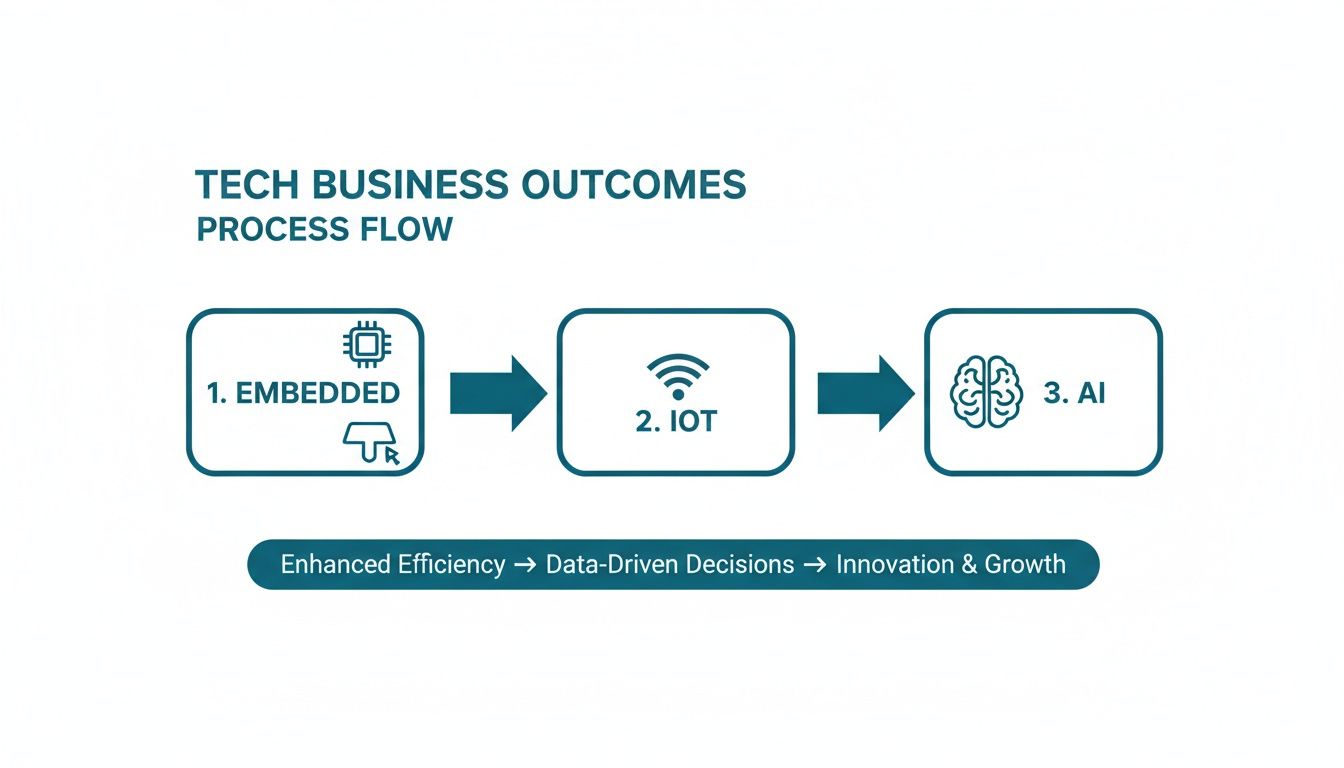

The diagram above illustrates the logical technology stack, from physical hardware to the intelligent insights that drive business outcomes.

This progression is critical: solid embedded engineering enables reliable IoT connectivity, which provides the data necessary to power AI-driven automation.

Stage 1: Assessment and Strategy

The most common failure mode is starting with a technology (“we need an AI solution”) instead of a business problem. The initial phase must be a rigorous, cross-functional effort between engineering, operations, and leadership to define a quantifiable business outcome.

A vague goal like “improve operational efficiency” is a recipe for scope creep and failure. A specific target like “reduce unplanned equipment downtime by 30% within 18 months” provides a clear north star for the entire project. This stage is about identifying the highest-value problem to solve first.
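A quantified target only works if progress is computed the same way every reporting period. The sketch below is a minimal example. It assumes that unplanned downtime is logged in hours per month, that the baseline is the pre-project monthly average, and that progress is judged against a straight-line path to the target. All three are hypothetical conventions that your team would agree on up front.

```python
def downtime_reduction(baseline_hours: float, current_hours: float) -> float:
    """Fractional reduction in unplanned downtime relative to the baseline."""
    if baseline_hours <= 0:
        raise ValueError("baseline must be positive")
    return (baseline_hours - current_hours) / baseline_hours


def on_track(baseline_hours: float, current_hours: float, months_elapsed: int,
             target: float = 0.30, horizon_months: int = 18) -> bool:
    """True if the achieved reduction meets a straight-line path to the target.

    The linear pacing rule is an assumption; milestone-based targets are equally valid.
    """
    expected = target * min(months_elapsed, horizon_months) / horizon_months
    return downtime_reduction(baseline_hours, current_hours) >= expected


print(downtime_reduction(40.0, 34.0))           # 0.15
print(on_track(40.0, 34.0, months_elapsed=6))   # True: 15% achieved vs 10% expected
print(on_track(40.0, 34.0, months_elapsed=18))  # False: 15% achieved vs 30% target
```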

Stage 2: MVP and Prototyping

With a clear objective, the next step is to de-risk the core technical assumptions with a Minimum Viable Product (MVP). The goal is to answer the most critical questions with the smallest possible investment of time and capital.

For a predictive maintenance system, the MVP is not a factory-wide dashboard. It is a single sensor on one critical asset, proving you can reliably collect and transmit the necessary data.
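One way to make "reliably collect and transmit" measurable in an MVP is to stamp each sensor message with a sequence number and compute the delivery ratio and gaps on the receiving side. The sketch below assumes a hypothetical scheme in which the device numbers messages consecutively; the function and its parameters are illustrative.

```python
def delivery_report(received_seqs, first_seq, last_seq):
    """Summarize delivery for messages numbered first_seq..last_seq inclusive.

    received_seqs: sequence numbers seen by the cloud side (duplicates allowed).
    Returns (delivery_ratio, sorted list of missing sequence numbers).
    """
    if last_seq < first_seq:
        raise ValueError("last_seq must be >= first_seq")
    expected = set(range(first_seq, last_seq + 1))
    got = expected & set(received_seqs)  # ignore duplicates and out-of-range numbers
    missing = sorted(expected - got)
    return len(got) / len(expected), missing


ratio, missing = delivery_report([0, 1, 2, 2, 4, 5, 7, 8, 9], first_seq=0, last_seq=9)
print(f"delivered {ratio:.0%}, missing {missing}")  # delivered 80%, missing [3, 6]
```

Tracking this ratio over a few weeks of real operation answers the MVP's core question with evidence rather than a demo.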

A critical failure mode during this phase is scope creep. The purpose of an MVP is to learn, not to build a feature-complete product. Resist the temptation to add "just one more feature." A tightly scoped MVP that validates a core assumption is far more valuable than a bloated prototype that tries to do everything and validates nothing.

Stage 3: Integration and Testing

This is where architectural plans meet the complexity of the real world. Integrating new IoT gateways and firmware with legacy industrial controllers and enterprise software is rarely straightforward. Challenges include proprietary communication protocols, data format mismatches, and IT security constraints.

Rigorous, multi-layered testing is non-negotiable:

- Unit Testing: Verifying individual software and firmware components.

- Integration Testing: Ensuring new components communicate correctly with legacy systems.

- End-to-End System Testing: Validating the entire data flow, from sensor to alert.
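At the unit level, even a one-line alert rule deserves tests that pin down its boundary behavior. Here is a minimal sketch using Python's built-in `unittest`. The `should_alert` rule and its 7.0 mm/s threshold are hypothetical placeholders, not values taken from any standard.

```python
import unittest

VIBRATION_LIMIT_MM_S = 7.0  # hypothetical alert threshold


def should_alert(vibration_mm_s: float) -> bool:
    """Raise an alert when vibration meets or exceeds the limit."""
    if vibration_mm_s < 0:
        raise ValueError("vibration amplitude cannot be negative")
    return vibration_mm_s >= VIBRATION_LIMIT_MM_S


class ShouldAlertTest(unittest.TestCase):
    def test_below_limit_is_quiet(self):
        self.assertFalse(should_alert(6.99))

    def test_limit_is_inclusive(self):
        self.assertTrue(should_alert(7.0))

    def test_negative_reading_is_rejected(self):
        with self.assertRaises(ValueError):
            should_alert(-1.0)
```

Saved as a module, this runs with `python -m unittest <module_name>`. Integration and end-to-end tests follow the same pattern with progressively more of the real system in the loop.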

Skipping these steps introduces significant downstream risk. A bug found in a lab environment is a minor cost; the same bug discovered post-deployment is a crisis that is exponentially more expensive to remediate.

Stage 4: Design for Manufacturability

A functional prototype is not a manufacturable product. Design for Manufacturability (DfM) and Design for Test (DfT) must be integrated into the process from the beginning, not treated as an afterthought.

This involves selecting components based on supply chain stability, not just performance specifications. It means designing enclosures for efficient assembly and field serviceability. Ignoring DfM leads to costly rework, production delays, and supply chain vulnerabilities. Selecting a microcontroller with a 52-week lead time can halt production entirely.

Stage 5: Scale and Optimization

With a validated and manufacturable solution, the focus shifts to controlled deployment and continuous improvement. The data collected post-launch is the critical feedback loop for optimizing algorithms, deploying firmware updates, and identifying new opportunities. This iterative cycle of data-driven optimization is what transforms a one-time project into a sustainable competitive advantage.

Seeing Digital Transformation in the Real World

Let’s apply the problem → diagnosis → solution → outcome framework to a concrete scenario in the highly regulated medical device industry.

The Problem: A Medical Device OEM Flying Blind

A mid-sized manufacturer of hospital infusion pumps produces reliable but disconnected devices. Once a pump leaves the factory, it becomes a black box. The OEM has zero visibility into its operational status, usage patterns, or potential failure modes. Clinicians must physically enter a patient’s room to verify infusion status, and all maintenance is reactive, triggered only after a device fails and disrupts patient care.

Diagnosing the Core Inefficiencies

This lack of connectivity creates significant business challenges:

- High Operational Costs: A substantial portion of the service budget is consumed by dispatching technicians for unscheduled field repairs, many of which were preventable.

- Limited Clinical Value: Without remote monitoring, clinicians cannot proactively address missed infusions, which can negatively impact patient outcomes.

- Transactional Revenue Model: The business model is limited to the one-time sale of hardware.

- Compliance Drag: Firmware updates require a burdensome, manual validation process to ensure compliance with standards like IEC 62304, slowing innovation.

The root cause is a lack of data. This data blindness makes proactive service, operational efficiency, and clinical innovation impossible.

Engineering a Connected Solution

The manufacturer partners with an engineering firm to develop a next-generation connected infusion pump. The solution involves a new embedded system designed for secure IoT connectivity. Custom firmware captures and transmits key operational data—infusion rates, device temperature, battery health, and error codes—to a secure cloud platform. A web-based dashboard provides clinicians with a centralized, real-time view of all pumps, enabling remote monitoring of infusion status while maintaining strict patient data privacy. The principles of remote asset tracking are transferable across industries, as seen in IoT in fleet management.
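The firmware-to-cloud payload is where the privacy requirement becomes concrete. The sketch below models a single telemetry message carrying the fields the case lists (infusion rate, device temperature, battery health, and error codes). The message is keyed only by a device identifier and contains no patient data. The field names, units, the `PUMP-0042` and `E103` values, and the JSON encoding are assumptions for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class PumpTelemetry:
    device_id: str             # asset identifier, never a patient identifier
    timestamp_utc: str         # ISO 8601, e.g. "2024-01-01T12:00:00Z"
    infusion_rate_ml_h: float
    device_temp_c: float
    battery_health_pct: int
    error_codes: List[str] = field(default_factory=list)

    def validate(self) -> None:
        """Reject physically implausible values before they are transmitted."""
        if self.infusion_rate_ml_h < 0:
            raise ValueError("infusion rate cannot be negative")
        if not 0 <= self.battery_health_pct <= 100:
            raise ValueError("battery health must be between 0 and 100 percent")

    def to_json(self) -> str:
        """Validate, then serialize compactly to keep cellular payloads small."""
        self.validate()
        return json.dumps(asdict(self), separators=(",", ":"))


msg = PumpTelemetry("PUMP-0042", "2024-01-01T12:00:00Z", 25.0, 31.5, 87, ["E103"])
print(msg.to_json())
```

Keeping patient context out of device telemetry, and joining it only inside the hospital's own systems, is one common way to narrow the privacy surface of the cloud platform.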

The Payoff: Measurable Business Outcomes

This digital transformation delivered quantifiable returns across the business.

The outcome was a fundamental shift from a reactive, hardware-centric model to a proactive, service-oriented one. By connecting their devices, the company unlocked new value for customers and created a sustainable competitive advantage.

The results were immediate and measurable:

- Reduced Risk: Predictive maintenance algorithms analyzing device telemetry led to a 40% reduction in unplanned downtime, enhancing patient safety and hospital operational efficiency.

- New Revenue Streams: The company now offers a subscription-based data and analytics platform to hospitals, creating a new, high-margin recurring revenue stream.

- Improved Patient Outcomes: Automated alerts for missed infusions allow for timely clinical intervention, directly improving the quality of care.

- Streamlined Compliance: The architecture supports secure Over-the-Air (OTA) firmware updates, enabling faster feature rollouts and significantly reducing the documentation burden for regulatory compliance.

Calculating ROI and Managing Implementation Risks

A digital transformation project must be underpinned by a credible business case. Vague promises of “efficiency” are insufficient. A robust financial model must connect technical investment directly to measurable business outcomes.

A comprehensive ROI model for digital transformation solutions must look beyond simple cost savings. It requires balancing upfront capital expenditures (CapEx) for hardware and software against long-term operational expenditures (OpEx) for cloud services and data management. Crucially, it must also quantify less obvious metrics like the value of accelerated time-to-market or the financial impact of risk reduction (e.g., the cost of a compliance failure or major downtime event that the new system prevents). This frames the initiative not as an expense, but as a strategic investment.
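Here is a minimal sketch of the arithmetic behind such a model: net present value and simple payback for an upfront CapEx followed by recurring OpEx, savings, and new service revenue. Every figure in the example is a placeholder assumption, and the discount rate is an input your finance team would set.

```python
def npv(rate: float, cash_flows) -> float:
    """Net present value; cash_flows[0] occurs now (year 0), cash_flows[t] at end of year t."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def payback_year(cash_flows):
    """First year in which cumulative (undiscounted) cash flow turns non-negative, else None."""
    total = 0.0
    for year, cf in enumerate(cash_flows):
        total += cf
        if total >= 0:
            return year
    return None


capex = 500_000           # hardware, firmware, integration (assumed)
annual_opex = 60_000      # cloud, connectivity, data management (assumed)
annual_savings = 180_000  # avoided downtime and field visits (assumed)
annual_revenue = 90_000   # new service subscriptions (assumed)

flows = [-capex] + [annual_savings + annual_revenue - annual_opex] * 5
print(f"NPV @ 10%: ${npv(0.10, flows):,.0f}, payback in year {payback_year(flows)}")
```

Risk reduction can be folded into `annual_savings` as an expected value, for example the estimated annual probability of a major downtime event multiplied by its cost.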

A Deeper Dive Into Implementation Risks

A strong ROI model is irrelevant if the project is derailed by unmanaged risks. The most significant threats are rarely isolated technology failures; they are systemic issues related to architecture, vendor dependencies, and security oversights.

Many of these risks are introduced long before the first line of code is written. Poor architectural decisions create a foundation of technical debt that compounds over the project's lifecycle, making future adaptations exponentially more expensive and complex.

Effective risk management begins during the initial planning stages, as detailed in our guide to the discovery phase of a project. A disciplined framework for identifying, assessing, and mitigating core risks is essential for a successful implementation.

Framework For Proactive Risk Mitigation

A structured approach allows for targeted strategies that address the root cause of potential problems, not just the symptoms.

Risk Mitigation Framework For Digital Transformation Projects

This table outlines common risks in digital transformation initiatives and provides actionable mitigation strategies to shift from reactive problem-solving to proactive prevention.

| Risk Category | Potential Failure Mode | Mitigation Strategy |
|---|---|---|
| Technical Debt | A rigid, monolithic architecture makes future feature additions slow and costly, stifling innovation and increasing long-term maintenance burdens. | Adopt a modular, service-oriented architecture from day one. This allows individual components to be updated or replaced independently, containing the impact of changes and enabling faster, parallel development cycles. |
| Vendor Lock-In | Over-reliance on a single vendor's proprietary hardware, software protocols, or cloud services creates high switching costs and limits future flexibility. | Prioritize the use of open standards and protocols (e.g., MQTT, OPC-UA) wherever possible. Design systems with clear abstraction layers that decouple your core application logic from vendor-specific implementations. |
| Cybersecurity | Insecure default configurations, lack of encrypted communication channels, or inadequate firmware update mechanisms create vulnerabilities in connected devices. | Implement a "secure by design" philosophy. This includes mandatory code reviews, penetration testing, and establishing a secure, authenticated process for Over-the-Air (OTA) updates, aligned with NIST cybersecurity guidelines. |
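The abstraction-layer mitigation in the vendor lock-in row can be sketched as a small interface that application logic depends on, with vendor-specific transports plugged in behind it. The `TelemetryTransport` protocol, both classes, and the topic scheme below are hypothetical. A real MQTT adapter would wrap a client library behind the same `publish` method; that adapter is deliberately not shown here.

```python
import json
from typing import Dict, List, Protocol, Tuple


class TelemetryTransport(Protocol):
    """What the application needs from any broker or cloud SDK, and nothing more."""

    def publish(self, topic: str, payload: bytes) -> None: ...


class InMemoryTransport:
    """Test double; a vendor adapter (e.g. wrapping an MQTT client) exposes the same method."""

    def __init__(self) -> None:
        self.sent: List[Tuple[str, bytes]] = []

    def publish(self, topic: str, payload: bytes) -> None:
        self.sent.append((topic, payload))


class TelemetryService:
    """Core logic: knows topics and message format, not which vendor delivers them."""

    def __init__(self, transport: TelemetryTransport, site: str) -> None:
        self.transport = transport
        self.site = site

    def report(self, asset_id: str, readings: Dict[str, float]) -> None:
        topic = f"{self.site}/{asset_id}/telemetry"  # hypothetical topic scheme
        self.transport.publish(topic, json.dumps(readings, sort_keys=True).encode())


transport = InMemoryTransport()
TelemetryService(transport, site="plant-1").report("pump-7", {"temp_c": 41.2, "vibration_mm_s": 2.3})
print(transport.sent)
```

With this structure, switching cloud providers means writing one new adapter class rather than touching `TelemetryService`, and the in-memory double keeps unit tests independent of any broker.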

By proactively managing these risks, the project focus shifts from firefighting to strategic execution. Building resilience into the project’s architecture and processes protects the schedule and budget while securing the long-term value of the solution.

Choosing the Right Engineering Partner

The success of a complex digital transformation solution often hinges on the selection of the right engineering partner. This decision is not merely about sourcing technical skills; it’s about finding a team whose operational model aligns with your strategic objectives and project realities.

Traditional models, such as large consulting firms or individual freelancers, present significant trade-offs. Large firms often come with high overhead and slow processes, while a solo practitioner introduces a single point of failure and lacks the breadth to manage an end-to-end program. An effective partnership functions as a flexible, deeply skilled extension of your internal team, providing not just execution but strategic guidance through the integration of hardware, firmware, and software.

Evaluating Partnership Models

When vetting partners, scrutinize the operational model itself, as it has direct implications for budget, timeline, and risk. A common mistake is selecting a partner for a single technical skill without considering their capability for project management and cross-domain integration.

The best partnership model is one that molds itself to your project’s needs. It should deliver elite, specialized talent precisely when you need it, without forcing you to pay for idle bench time during the slower phases of development.

Key evaluation criteria include:

- Specialization vs. Generalization: Does the partner provide true domain experts for firmware, mechanical, and cloud engineering, or generalists who may lack the depth for mission-critical tasks?

- Accountability and Coordination: Is there a single, accountable program lead orchestrating all technical disciplines? A collection of uncoordinated contractors is a recipe for integration failure.

- Scalability and Agility: Can the partner scale resources up or down in response to project demands? This flexibility is essential for budget control and meeting deadlines.

The Dynamic Expert Network Advantage

The Dynamic Expert Network model offers a superior alternative. Instead of assigning available bench staff, this model assembles a bespoke team of elite, pre-vetted specialists on-demand, precisely matched to the requirements of each project phase.

During initial architecture, a systems architect and a Design for Manufacturability (DfM) expert are engaged. Later, for bootloader optimization, a deeply specialized firmware engineer joins the team. This ensures optimal talent is applied to each specific challenge. A single, accountable program lead coordinates the entire effort, owning the end-to-end outcome. This model accelerates timelines and maximizes ROI by delivering precisely the expertise required, exactly when it is needed.

Frequently Asked Questions

Practical questions from engineering leaders on implementing digital transformation solutions.

How Do We Start Without A Huge Upfront Budget?

Avoid the “big bang” approach of a complete overhaul. Start with a tightly-scoped pilot project or MVP focused on a single, high-impact business problem. For example, instrument one critical piece of machinery for predictive maintenance rather than the entire factory. This validates the technology and demonstrates tangible ROI with contained risk and minimal initial investment.

What Is The Biggest Mistake Companies Make?

The most common failure is treating digital transformation as a technology project instead of a business strategy enabled by technology. Success is not defined by deploying a new cloud platform; it is defined by business outcomes—a 20% reduction in production defects or a three-month acceleration in time-to-market.

The entire initiative has to be driven by business goals. Technology is just the tool you use to get there. Projects that don’t have clear, measurable business KPIs from day one almost always drift off course and fail to deliver any real value.

How Does A Dynamic Expert Network Differ From A Traditional Firm?

A traditional firm assigns available staff. A Dynamic Expert Network starts with your project’s specific needs and assembles a custom team of elite, pre-vetted specialists to match. This provides world-class expertise in domains like firmware, RF design, or DfM precisely when needed, without the cost of unutilized resources. This model delivers superior agility, deeper expertise, and greater cost efficiency, all coordinated by a single program lead who owns the outcome. Exploring various outsourcing development team solutions can be a strategic way to access this specialized, on-demand talent.

At Sheridan Technologies, we de-risk complex engineering projects by deploying precisely the expertise you need, when you need it. If you are ready to move from planning to execution with a partner who understands both the technical and business imperatives, let’s connect.

Schedule a complimentary assessment with our engineering leads today.